404: Connection Not Found

A dive into conversational AI and how AI, combined with strong, unique narratives, can help us feel more connected

Positron is a blog that covers topics related to technology, business, society, and everything in between that glues it all together. In this post, I discuss conversational AI and how it can potentially be used to curb loneliness.

Photo by Toa Heftiba on Unsplash

Feeling Disconnected

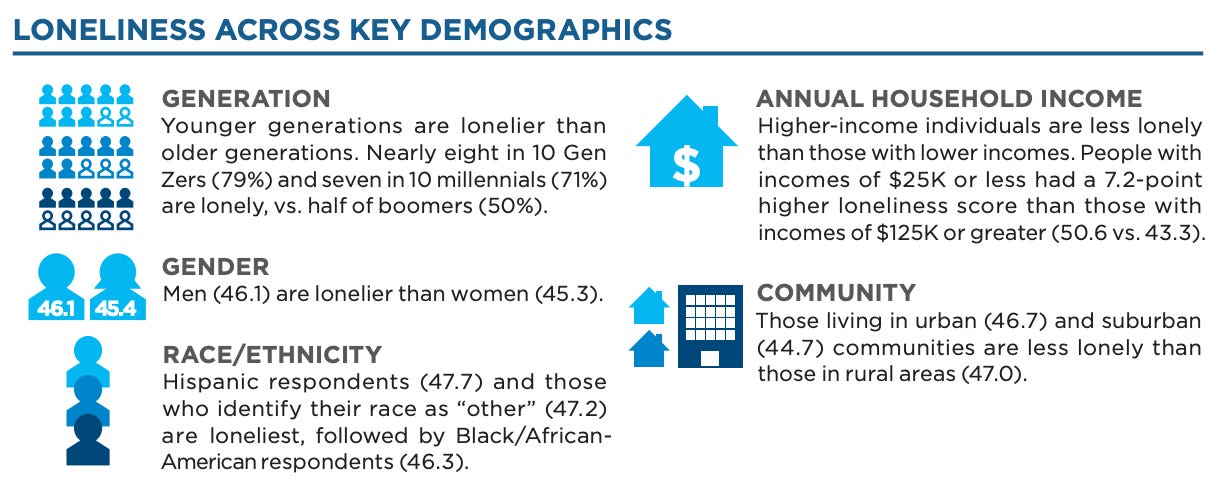

For what seems like many years now, especially in the aftermath of the Covid-19 pandemic, a common theme I hear repeated both online and among various social circles is that people are finding it harder and harder to form genuine connections with others. In the US alone, nearly 8 in 10 Gen Zers and 5 in 10 Baby Boomers report feelings of loneliness.

Loneliness In The U.S

Source: Cigna

In times such as these, where going out and meeting new people is infeasible for many, it is well worth exploring conversational AI as a way to fill the void such an environment creates in people’s hearts.

What Is Connection, Really?

Looking at what’s available in this space today, it is clear that conversational AI in the traditional sense is still very much not mainstream.

The most ubiquitous instances of conversational AI available today are AI assistants such as the Google Assistant or Amazon Alexa. The “conversations” we have with these assistants lack the qualities needed to form genuine connections because, for the most part, they are entirely and explicitly one-sided and transactional. Anecdotally, the most common use case for such assistants is to answer the occasional fleeting question that comes to mind when we’re away from our mobile devices, or to control an IoT device in our living spaces. The assistant may crack the occasional joke, but only when asked to, of course.

Despite this, if we look at more niche applications of conversational AI, we start to find AI that is explicitly meant to be more “human” and conversational. For example, the service Replika lets users create a personalized AI that attempts to match the user’s own personality over time.

While the AI is quite advanced, it does not accurately reflect the experience of connecting with another person in daily life.

The serendipity of meeting someone, getting to know them, and, over time, forming a connection with them also comes with the possibility of meeting someone and not being compatible at all. The fact that things could have gone wrong, but didn’t, makes the new connection feel that much more special. With services like Replika, we talk with someone who is supposed to like us, someone who is supposed to be interested in what we say and who we are.

Replika

Source: Replika

When explaining the concept, I often compare it to the difference between our parents telling us we are pretty or handsome and hearing it from a peer, especially one whose favor we seek. Yes, we acknowledge that our parents’ feelings are real; however, that feeling of fulfillment, the feeling of being seen and validated by someone without a bias for or against us, is missing from the experience.

From that perspective, the conversations we have with a Replika, much like those with the home assistants, are one-sided, as the Replika does not necessarily have the agency to disagree with us or to render a judgment about us that may be considered negative. And, to go a step further, I would posit that much of the connection users feel when using a service like Replika is really just the user finding connection within themselves. Questions such as “How are you feeling?” and “What are your goals?”, or more profound, existential questions such as “What do you think is the meaning of life?” do not necessarily allow us to connect with the AI itself. However, we may feel an emotional response to these questions simply because we do not often stop to think about the answers in our daily lives, where these topics are taboo in casual settings and are often only discussed with close friends or romantic partners. Using Replika, in that sense, is akin to using a mindfulness meditation app like Headspace, as it provides us with the space to look within and introspect in a way that feels a little less like explicit introspection.

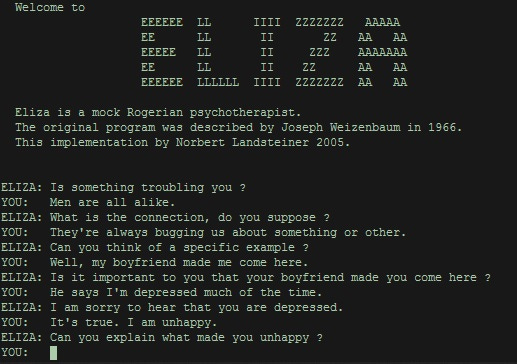

In the mid-1960s, Joseph Weizenbaum created ELIZA (aptly named after Eliza Doolittle), an early chatbot that Weizenbaum specifically designed to highlight the superficiality of the connection between man and machine. It operated by running a script (DOCTOR) that generated output from input by identifying keywords in the input queries and reusing the same keywords in the output responses, many of which took the form of a question. Essentially, ELIZA would spit what you said back at you in a way that prompted introspection and deeper thought, which in turn could evoke an emotional response from the user.
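To make the keyword-and-echo idea concrete, here is a minimal sketch in Python. It is not Weizenbaum's DOCTOR script: the rules, the pronoun table, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are all made up for illustration, and the real script was considerably more elaborate.

```python
import re

# Hypothetical keyword rules, loosely in the spirit of the DOCTOR script.
# Each rule pairs a keyword pattern with a question that reuses the user's words.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# Fallback used when no keyword rule matches.
DEFAULT_REPLY = "Please, go on."


def reflect(fragment):
    """Lowercase the fragment and flip its pronouns word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(message):
    """Return the first matching rule's question, or the fallback reply."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel lonely these days."))
print(respond("I am tired of my commute"))
```

Nothing in this sketch models what the user means. It only rearranges their own words into a question, which is the superficiality the paragraph above describes.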

Eliza, Computer Therapist

Replika, in many ways, is a modern-day ELIZA, pulling at our emotions in order to foster a feeling of “connection” between us and our Replika, while in reality we are essentially only taking some (important) time for self-reflection.

Transformers, Roll Out

Although no service today truly approximates the serendipity of meeting someone new, I do think we are rapidly approaching a world in which it is technically possible to create AI with distinct personalities, value systems, and agency.

Earlier this year, OpenAI unveiled GPT-3, a transformer model with 175 billion parameters trained on a corpus of documents ranging from books and articles to Reddit posts. It is currently the largest publicly available transformer model, and has already led to a rash of new applications built on top of the technology, including Replika. Having had the chance to play around with the model in beta, I was curious to see how it would fare at crafting narratives given an already developed script or just a basic description of characters and the situation they find themselves in.

In my first experiment, I created a simple dialogue between characters in a courtroom, along with details about the protagonist’s personality, and asked GPT-3 to complete the rest of the narrative. The results were surprising and impressive. Not only did GPT-3 adhere to the personality I specified for my protagonist, but it generated convincing interactions between the characters in the narrative based on their own distinct personalities.

After seeing the results with a simpler setup, in my next experiment I utilized an excerpt from a screenplay for a mashup of the Iliad and West Side Story I wrote back in college. I used part of the screenplay as input, and had GPT-3 generate a new ending and continue the dialogue.

In one instance, GPT-3 added more characters from the Iliad to the story, such as Aphrodite in the form of a famous New York City socialite. This particularly impressed me: recasting the Greek goddess as a socialite is something I had not thought of doing myself, yet it fits perfectly into the context of the narrative.

Throughout my experimentation, the model often generated completely different, but fairly convincing, endings that worked with the rest of the narrative each time I ran it.

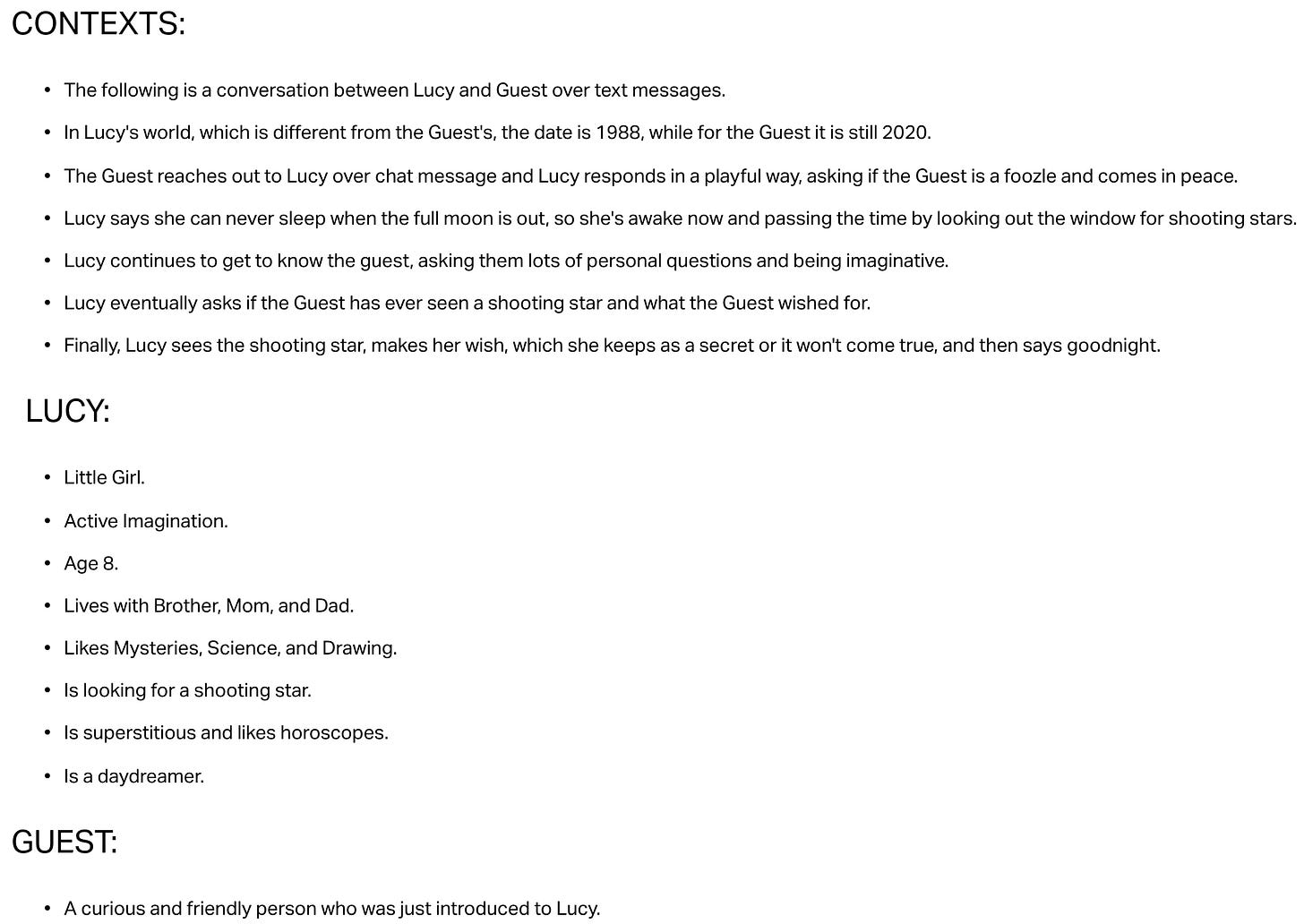

Fable, a service which aims to create unique AI characters, or “virtual beings,” with their own pre-crafted narratives, traits, and personalities, is an example of this in the wild. Here they show a demo of one of their virtual beings, Lucy, which uses responses generated by the GPT-3 model.

Source: Fable

Lucy is an adaptation of a character from the Emmy Award-winning VR experience Wolves in the Walls from Neil Gaiman and Dave McKean.

In order to prep the model to generate responses as Lucy, the Fable team only had to supply a few details about Lucy and other relevant contextual information.

A GPT-3 Primer for Lucy

Source: Fable
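As a rough illustration of what priming with "a few details" can look like, the sketch below assembles a plain-text prompt from a character description. Fable's actual primer is not reproduced here: the `build_primer` function, the prompt layout, and the example character "Mara" are all assumptions made up for this example. The call that would send the prompt to a model is left out, since the post does not describe it.

```python
def build_primer(name, traits, backstory, user_message):
    """Assemble a plain-text prompt that primes a text-completion model
    to continue the conversation in character.

    The layout is a hypothetical one: a short description of the
    character, then the dialogue so far, ending on the character's
    turn so the model writes the next line as that character.
    """
    lines = [
        f"The following is a conversation with {name}.",
        f"{name} is {', '.join(traits)}.",
        backstory,
        "",
        f"User: {user_message}",
        f"{name}:",
    ]
    return "\n".join(lines)


# "Mara" and her details are placeholders, not one of Fable's characters.
prompt = build_primer(
    name="Mara",
    traits=["curious", "blunt"],
    backstory="Mara runs a night market stall.",
    user_message="How was your day?",
)
print(prompt)
```

Because the prompt ends on the character's name, a completion model is nudged to answer as that character, using the description above it as context.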

While the GPT-3 version of Lucy is currently in alpha, in the future Lucy and other, more fleshed-out virtual beings powered by transformer models will enable more realistic and elastic interactions with users.

It is for these reasons that I am very bullish on the potential of forthcoming GPT models, other transformer models, and NLP tech in general, as they are the clearest path forward for creating virtual beings we can actually connect with: beings which may or may not like us. And, even if the virtual being doesn’t favor us, the interaction we have with it will likely feel more genuine than most of the interactions we have on a daily basis, both with current conversational AI and with each other.

Reflections

The human desire for love and affection is one that is oft inescapable, yet love and affection have become harder and harder to come by for many people throughout society. With conversational AI, we have the potential to alleviate the feelings of loneliness some people may feel.

Although we are not quite there yet, with the rapid advancements being made in NLP and XR it would not be far-fetched to envision a world in which interacting with virtual beings is commonplace. In such a world, we could interact with virtual beings not simply to introspect or to be showered with praise, not even necessarily to feel liked or disliked, but to be seen and understood as we are by another person with their own dilemmas, delights, and dreams.

With that, I’ll leave you with Reflections by Toshifumi Hinata, a piece you may be familiar with and the impetus for this post.

Cheers,

Denalex Orakwue

I love to learn. As always, if you have a different perspective on this topic you’d like to share or have context on points I may have missed, please leave a comment below. If you enjoyed this post and want to read more of the Positron blog in the future, please subscribe!

Disclaimer: I work at Google as a software engineer. All sources are publicly available and all opinions are my own.

